TMA4160 Lecture log

This log will contain rough notes from the lecture, sketching the important results and arguments.

8/10: We briefly discussed discrete logarithms in E(F), summary: for general curves Pohlig-Hellman, Shanks and Pollard's ρ all work well, but index calculus-type algorithms seem not to work; for special curves it is sometimes (very) easy to compute discrete logarithms.

We discussed non-adjacent forms and point multiplication (how to compute aP for some integer a and point P), and point compression.

Finally, we had a brief demonstration of computing in Maple. This worksheet contains some code to work with elliptic curves.

1/10, 7/10: Let F be a field with characteristic different from 2 and 3. An algebraic plane curve is the zeros of a polynomial C ∈ F[X,Y], i.e. C(F) = { (x,y) ∈ F×F | C(x,y) = 0 }. Conventionally, we write the curve not as C, but as C = 0. A curve is smooth if it has a unique tangent everywhere.

An elliptic curve E is a smooth curve of the form y² = x³ + Ax + B. To get what we want, we also add a point at infinity O, and postulate that this point is on the curve. Next, we define an addition operation on the points on the curve, as follows:

(i) O is the identity; (ii) the point P = (x,y) has inverse -P = (x,-y), and P+(-P) = O; (iii) if P and Q are distinct non-zero points and P ≠ -Q, then the line through P and Q intersects E in a third point R (not necessarily distinct from P and Q), and P+Q will be -R; (iv) if P and Q are the same, then we use the tangent through P and proceed as for (iii).

It is possible to show that this operation makes the elliptic curve into an abelian group, and that there are practical formulae for computing the coordinates of P+Q in cases (iii) and (iv).

When F is a finite field of size q, E(F) will be a finite group. It may be non-cyclic, but two points are sufficient to span the group, i.e. E(F) is isomorphic to Zn×Zm, where n divides both m and q-1. Furthermore, the number of points on the curve is q+1-t, where |t| ≤ 2√q.

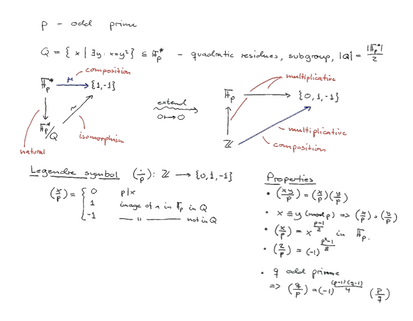

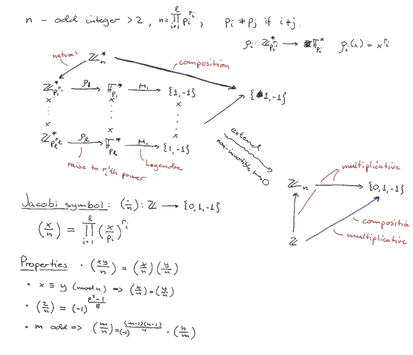

23/9, 24/9: We first discussed the Legendre and Jacobi symbols:

The important thing is that we can use the Jacobi symbol's properties to easily compute the Jacobi symbol without knowing the factorisation of n.

The prime number theorem says that the prime numbers are sufficiently "dense" among the integers that if we pick a random number from some range, the probability that it is prime is fairly large. In fact, if we can recognize primes, we can find primes simply by selecting random numbers until we see a prime.

How do we recognize a prime? Suppose we can build an algorithm that (i) proves that a composite number is composite with probability α, (ii) never claims that a prime number is composite, and (iii) each run of the algorithm is independent of the others. If α is reasonably big, we can run the algorithm k times: if our number is a prime, it will never be proven composite; if our number is composite, it will be proven composite with probability 1-(1-α)^k, which will quickly approach 1 as k grows.

Our strategy for such an algorithm is as follows: First we identify a subgroup G of Zn* such that (i) if n is prime, G = Zn*, but if n is composite, G is a proper subgroup; and (ii) we can easily recognize elements of G. Then we sample a random x in Zn* and see if it is in the subgroup. If it is not, we have proven n composite.

The first subgroup we tried is identified by x^(n-1) = 1, but unfortunately, if n is a Carmichael number, then G = Zn* and our method does not work.

The second subgroup is identified by by comparing x^( (n-1) / 2) to the Jacobi symbol (x / n). If n is prime, this will always be the case, but if n is composite, this does not always hold (see ex. 5.22 in Stinson). Therefore, we can reliably recognize primes and therefore find primes.

16/9, 17/9: We first studied how to compute discrete logarithms in prime-ordered groups, |G| = p. The first method is Shanks' baby-step giant-step algorithm. Two ideas: by building a table of pairs (g^i, i), 0 ≤ i < L and sorting it on the first coordinate, we have a lookup table that allows us to compute discrete logarithms efficiently if they are below L. Also: we can write log x = q L + r, and by comparing x, xg^(-L), xg^(-2L), etc. against our table, we will eventually arrive at xg^(-qL) = g^r, from which we easily compute the discrete logarithm of x.

The time required for this is "roughly" L + p/L, and the optimal value is L = sqrt(p).

The second method is Pollard's ρ. First idea: we have a "random-looking" function f: G → G and a corresponding function g: Zp × Zp → Zp × Zp such that if f( x^a g^b) = z and g(a, b) = (c, d), then z = x^c g^d. Now we iterate the f and g functions in two different ways, from the same starting point: the slow way uses s = f(s) and (r,u) = g(r, u), the fast way uses t = f(f(t)) and (v,w) = g(g(v, w)). We expect that eventually (after approximately sqrt(p) iterations, the two iterates collide: s = t, but (r,u) ≠ (v, w).

This gives us x^r g^u = x^v g^w, or x^(r - v) = g^(w-u), or x = g^( (w-u)/(r-v)), or log x = (w-u) / (r-v).

Shanks' and Pollard's ρ both work for arbitrary groups. Index-calculus methods do not in general work for arbitrary groups.

Observe that for an element z in a finite field Fp, we can choose a canonical representative in Z. If we have a bunch of small primes, we can sometimes express the integer representative as a product of powers of these primes. The natural map from Z down to Fp then maps this product into the finite field, giving us a relation z = ∏ p_i^(r_i). This is relatively easy to do, and there are quite a lot of such relations. (There is no similar concept for general groups!)

By trying many different z of the form g^r, we can find many relations of the form g^(r_j) = ∏ p_i^(r_ij). These give us equations r_j = ∑ r_ij log p_i in Z(p-1), and if we have enough of these, we can solve for the unknown log p_i.

Then we find a relation x g^s = ∏ p_i^s_i, giving the equation (log x) + s = ∑ s_i log p_i, from which we easily recover log x.

It can be shown that this method is significantly faster than Shanks' or Pollard's ρ methods if p-1 has a sufficiently large prime divisor.

10/9: We continued looking at the group structure of cyclic groups of prime-power order, that is, |G| = p^l for some prime p. This group has a subgroup of order p, and we shall be computing discrete logarithms there instead of in G. We can write the p-adic expansion of the discrete logarithm, that is log x = r0 + r1 p + r2 p² + … + r(l-1) p^(l-1). The ri are the "digits" of the logarithm, and we shall find them one at a time.

First, we compute x^(p^(l-1)) = g^(r0 p^(l-1)) = (g^(p^(l-1)))^r0. This has order at most p - we have moved "part of" x - the one corresponding to the first digit of the logarithm - into the subgroup of order p. Now we compute the discrete logarithm in this subgroup, using g^(p^(l-1)) as base, to find r0, the first digit.

Next, we compute x1 = x g^(-r0) and x^(p^(l-2)) = (g^(p^(l-1)))^r1. Now we have removed the first digit and moved the second digit into the subgroup of order p, where we can find it by computing the discrete logarithm.

Proceeding like this, we compute all l digits and recover the full discrete logarithm by computing l discrete logarithms in the subgroup.

Again, the cryptographic significance is that it is essentially no harder to compute discrete logarithms in G than it is to compute discrete logarithms in the subgroup of order p.

9/9: We started with the Pohlig-Hellman algorithm for computing discrete logarithms. This algorithm exploits the group structure of cyclic groups to simplify the computation. If we have a cyclic group G of order ab, where a and b are relatively prime, G is isomorphic to the direct product of two subgroups Ga and Gb, where Ga and Gb contain all the elements of order dividing a and b, respectively. For suitable exponents ea and eb, we get an isomorphism by mapping x to the tuple (x^ea,x^eb). The inverse map takes (y,z) to yz.

Let r be the discrete logarithm of x to the base g, i.e. x = g^r. It is easy to show that the discrete logarithm of x^ea to the base g^ea is congruent to r modulo a.

The idea is now to compute discrete logarithms in the smaller groups Ga and Gb, giving us congruences r ≡ ra (mod a) and r ≡ rb (mod b). Then we use the Chinese remainder theorem to recover r.

Recall that the discrete logarithm to the base g is essentially an isomorphism G → Zab taking g to 1. What we have done above is to compute a chain of isomorphisms G → Ga × Gb → Za × Zb → Zab, where the first is x → (x^ea,x^eb), the second is (y,z) → (log y, log z) using the appropriate bases, and the last is CRT. The composition of these isomorphisms is equal to the isomorphism log: G → Zab, but easier to compute.

The cryptographic significance is that it is essentially no harder to compute discrete logarithms in G than it is to compute discrete logarithms in Ga and Gb.

3/9: We redid the argument that counter mode is secure. The argument goes as follows. A block cipher f: K → Perm(A) is secure if the permutation selected by a random key "looks like" a random permutation.

In Counter mode, we evaluate the permutation at (say) l distinct points and add the result to the plaintext. If the permutation "looks" random, this is the same as selecting l distinct values at random. We really want this to look like l values chosen independently at random. Since we know there will be no repeated values, the values are not independent. Does it matter?

We know (birthday paradox) that when we select l points from a set of size n, the probability that we select distinct points is at most exp(-l² / n). If l is much smaller than n, this probability is small. The conclusion: as long as we do not encrypt too much, counter mode produces random-looking strings.

The next topic was message authentication codes, or MACs. A MAC is a function g: K → Map(A^l, T). For a random key k, the function g(k) should be hard to predict, even if you have seen it evaluated for a number of distinct messages.

We discussed a simple example based on polynomial evaluation. Suppose A=F for some finite field F of order q. Consider the map from F^l into F[x] taking m = (m0, m1, …, m(l-1)) to p_m(x) = m0 x + m1 x² + … + m(l-1) x^l.

The first construction uses K = F×F: and g(r,s)(m) = p_m(r) + s. We proved that if you have only seen g(r,s) evaluated at a single point, it is impossible to predict its value at any other point, as long as l is small compared to q. This follows from the fact that for every value z and message m, there are exactly q pairs (r,s) such that g(r,s)(m) = z (for any value r, there is exactly one choice of s that fits the requirements). But if we try to guess a value z' for a message m', there are very few pairs that also satisfy g(r,s)(m') = z' (we see this by considering the zeros of the polynomial p_(m-m')(x)-(z-z'), there are at most l of them).

The second construction uses a block cipher: g(r,s)(m) = f(s)(p_m(r)). The block cipher hides the evaluation of the polynomial, and we argued that this was secure as well, as long as the number of function evaluations was small compared to q.